In this article, let’s learn about caching! We will cover caching basics, in-memory caching in ASP.NET Core applications, and more. We will build a simple endpoint that demonstrates setting and getting entries in the in-memory cache. After that, we will set up another endpoint where we will see a noticeable performance improvement (over 80% faster) from using caching. Let’s get started.

What is Caching?

Caching is a technique of storing frequently accessed data so that future requests for that data can be served to the client much faster.

In other words, you take the most frequently used data, which is also the least frequently modified, and copy it to temporary storage so that future calls from the client can access it much faster. This technique can boost the performance of your application drastically by removing unnecessary and frequent requests to the data source.

Theoretically, this is how caching would work. Client 1 requests some data, and it takes about 20 seconds to fetch. While fetching, we also copy the fetched data to temporary storage. Now, when Client 2 requests the same data, the response arrives in under a second or two.

You may be wondering: what happens if the data changes? Will we still be serving an outdated response?

No, there are various ways to refresh the cache and to set an expiration time for cache entries. We will talk about this in detail later in the article.

It is important to note that applications should be designed so that they never depend directly on the cached data. The application should use the cached data only if it is available. If the cached data has expired or is not available, the application should request it from the original data source. Get the point?
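The flow described above (use the cached copy when it exists, otherwise fall back to the data source and keep a copy) can be sketched in a few lines. This is only an illustration under assumptions: `SimpleCache` and `loadFromSource` are hypothetical names, and a plain dictionary with an expiry timestamp stands in for a real cache.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration only: a dictionary plus an expiry timestamp
// stands in for a real cache. It is not thread-safe.
public class SimpleCache<TValue>
{
    private readonly Dictionary<string, (TValue Value, DateTime ExpiresAt)> entries
        = new Dictionary<string, (TValue Value, DateTime ExpiresAt)>();
    private readonly TimeSpan lifetime;

    public SimpleCache(TimeSpan lifetime)
    {
        this.lifetime = lifetime;
    }

    // Returns the cached value when it is present and not expired;
    // otherwise calls the data source and stores a fresh copy.
    public TValue GetOrLoad(string key, Func<TValue> loadFromSource)
    {
        if (entries.TryGetValue(key, out var entry) && entry.ExpiresAt > DateTime.UtcNow)
        {
            return entry.Value; // cache hit: the data source is not touched
        }

        TValue value = loadFromSource(); // cache miss: go to the original source
        entries[key] = (value, DateTime.UtcNow.Add(lifetime));
        return value;
    }
}
```

Notice that the caller never has to know whether the value came from the cache or from the source, which is exactly the "never depend on the cache" rule in practice.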

Caching in ASP.NET Core

ASP.NET Core has some great out-of-the-box support for various types of caching as follows.

- In-Memory Caching - Where the data is cached within the server’s memory.

- Distributed Caching - Where the data is stored outside the application, in an external store such as Redis.

In this article, we will go in-depth into In-Memory Caching.

What is In-Memory Caching in ASP.NET Core?

With ASP.NET Core, it is possible to cache data within the application itself. This is known as In-Memory Caching in ASP.NET Core. The application stores the data in the memory of the server instance, which in turn drastically improves the application’s performance. This is probably the easiest way to implement caching in your application.

Pros and Cons of In-Memory Caching

Pros

- Much quicker than distributed caching, as it avoids communicating over a network.

- Highly Reliable.

- Best suited for Small to Mid Scale Applications.

Cons

- If configured incorrectly, it can consume your server’s resources.

- As the application scales and caching periods grow longer, the memory the server needs can make it costly to maintain.

- If deployed in the cloud across multiple instances, each instance holds its own cache, so keeping the caches consistent can be difficult.

Getting Started

Setting up caching in ASP.NET Core could hardly be easier. It takes just a few lines of code, and it can easily improve your application’s response time by 50-75% or more!

In-Memory Caching in ASP.NET Core is a Service that should be registered in the service container of the application. We will be using it with the help of dependency injection later on in this tutorial.

Let’s create a new ASP.NET Core 3.1 WebAPI Solution in Visual Studio 2019. After that, just navigate to the Startup.cs class and add the following line to the ConfigureServices method.

```csharp
services.AddMemoryCache();
```

This adds a non-distributed, in-memory implementation of IMemoryCache to the IServiceCollection.

That’s it. Your application now supports in-memory caching. Now, to get a better understanding of how caching works, we will create a simple endpoint that can set and get cache entries. We will also explore the expiration settings for caching in ASP.NET Core.
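To show where that registration line sits, here is a minimal sketch of a Startup class. It assumes the default ASP.NET Core 3.1 Web API template; the Configure method and any other services your project registers are left out.

```csharp
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    // Called by the runtime to register services in the container.
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddControllers();

        // Registers IMemoryCache so it can be injected into controllers.
        services.AddMemoryCache();
    }

    // Configure(...) omitted: the request pipeline is unchanged by caching.
}
```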

Endpoint to Get / Set Cache in Memory

In the Controllers folder, add a new Empty API Controller and name it CacheController. Here we will define just 2 endpoints using GET and POST Methods.

The POST method will be responsible for setting the cache. The cache works much like a C# dictionary. That means you will need 2 parameters, a key and a value. We will use the key to identify the value (data).

The cache entry that we set earlier can be viewed using the GET endpoint. But this depends on whether the entry exists and has not expired.

Here is what the controller looks like.

```csharp
[Route("api/[controller]")]
[ApiController]
public class CacheController : ControllerBase
{
    private readonly IMemoryCache memoryCache;
    public CacheController(IMemoryCache memoryCache)
    {
        this.memoryCache = memoryCache;
    }
    [HttpGet("{key}")]
    public IActionResult GetCache(string key)
    {
        string value = string.Empty;
        memoryCache.TryGetValue(key, out value);
        return Ok(value);
    }
    [HttpPost]
    public IActionResult SetCache(CacheRequest data)
    {
        var cacheExpiryOptions = new MemoryCacheEntryOptions
        {
            AbsoluteExpiration = DateTime.Now.AddMinutes(5),
            Priority = CacheItemPriority.High,
            SlidingExpiration = TimeSpan.FromMinutes(2),
            Size = 1024,
        };
        memoryCache.Set(data.key, data.value, cacheExpiryOptions);
        return Ok();
    }
    public class CacheRequest
    {
        public string key { get; set; }
        public string value { get; set; }
    }
}
```

Line 5 - Defining IMemoryCache to access the in-memory cache implementation.

Line 6 - Injecting the IMemoryCache through the constructor.

Let’s go through each of the methods.

Setting the Cache.

This is a POST method that accepts an object with key and value properties, as mentioned earlier.

MemoryCacheEntryOptions - This class is used to define the crucial properties of the concerned caching technique. We will be creating an instance of this class and passing it to the memoryCache object later on. But before that, let us understand the properties of MemoryCacheEntryOptions.

- Priority - Sets the priority of keeping the cache entry in the cache when memory has to be freed. The default setting is Normal. Other options are High, Low and NeverRemove.

- Size - Allows you to set the size of this particular cache entry, so that it doesn’t start consuming the server resources. The value is unitless, and it is only enforced when a SizeLimit is configured on the cache itself.

- Sliding Expiration - A defined TimeSpan after which a cache entry will expire if it has not been used by anyone during that period. In our case, we set it to 2 minutes. If, after setting the cache, no client requests this cache entry for 2 minutes, the entry will be removed.

- Absolute Expiration - The problem with Sliding Expiration is that, theoretically, the entry can last forever. Let’s say someone requests the data every 1.59 minutes for the next couple of days; the application would technically be serving an outdated cache for days on end. With Absolute Expiration, we can set the actual expiration of the cache entry. Here it is set to 5 minutes. So, 5 minutes after the entry is set, it expires regardless of the sliding expiration. It’s always a good practice to use both of these expiration checks to improve performance. But remember, the absolute expiration SHOULD NEVER BE LESS than the Sliding Expiration.
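To see how the two expirations combine, here is a small sketch that builds the same 2-minute sliding and 5-minute absolute settings with the fluent extension methods and tries them against a standalone MemoryCache. The class name `ExpirationSketch` and its methods are made up for illustration; they are not part of the article's project.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical helper, for illustration only.
public static class ExpirationSketch
{
    // The entry expires after 2 idle minutes, and in any case
    // no later than 5 minutes after it was stored.
    public static MemoryCacheEntryOptions BuildOptions()
    {
        return new MemoryCacheEntryOptions()
            .SetSlidingExpiration(TimeSpan.FromMinutes(2))
            .SetAbsoluteExpiration(TimeSpan.FromMinutes(5))
            .SetPriority(CacheItemPriority.High);
    }

    // Stores a value with those options and reads it straight back.
    public static string StoreAndRead()
    {
        using (var cache = new MemoryCache(new MemoryCacheOptions()))
        {
            cache.Set("Name", "Mukesh", BuildOptions());
            return cache.TryGetValue("Name", out string value) ? value : null;
        }
    }
}
```

Passing a TimeSpan to SetAbsoluteExpiration makes the deadline relative to the moment the entry is stored, so there is no need to compute a date by hand.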

Finally, in Line 27, we set the cache entry by calling the Set method and passing in the key, the value and the options object we created.

Retrieving the Cached Entry.

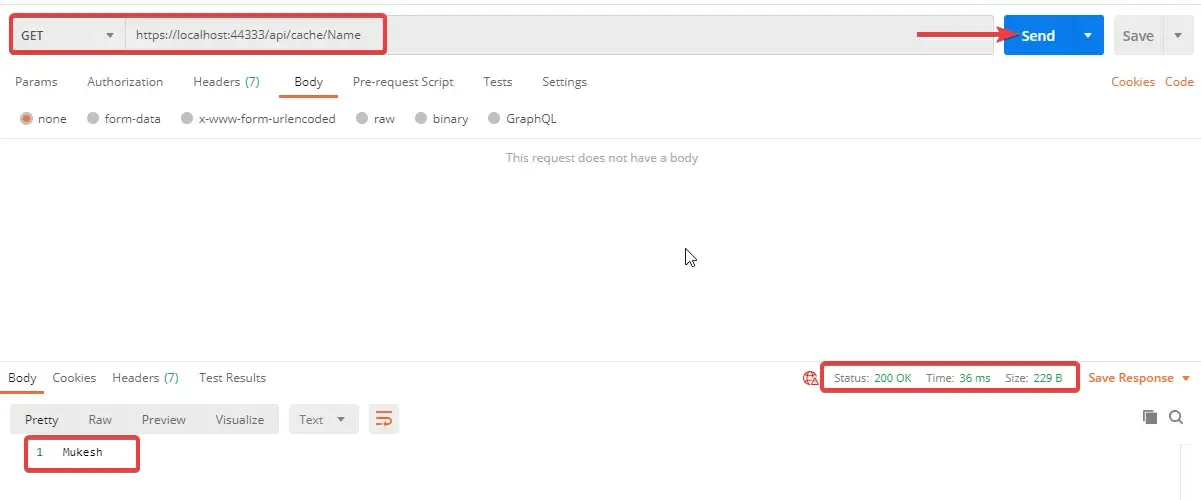

Imagine that we used the POST Method to set the cache. Let’s say our parameters were, Key = “Name” , Value = “Mukesh”. Once we set this entry, we will need a method to retrieve it as well.

GetCache method of the CacheController is a GET Method that accepts key as the parameter. It’s a simple method where you access the memoryCache object and try to get a value that corresponds to the entered key. That means, if you pass the key as “Name”, you are supposed to get “Mukesh” as your response.
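The GetCache method above returns whatever TryGetValue hands back, even when nothing was found. As a variation (not the controller from this article), here is a sketch that uses the boolean result of TryGetValue to answer with a 404 on a cache miss. The controller name `CacheLookupController` is hypothetical.

```csharp
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical variation of the GET endpoint, for illustration only.
[Route("api/[controller]")]
[ApiController]
public class CacheLookupController : ControllerBase
{
    private readonly IMemoryCache memoryCache;

    public CacheLookupController(IMemoryCache memoryCache)
    {
        this.memoryCache = memoryCache;
    }

    [HttpGet("{key}")]
    public IActionResult GetCache(string key)
    {
        // TryGetValue returns false when the key was never set or has expired.
        if (memoryCache.TryGetValue(key, out string value))
        {
            return Ok(value);
        }

        return NotFound();
    }
}
```

Whether a miss should be a 404 or an empty response is a design choice; the point is that the return value of TryGetValue tells you which case you are in.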

That’s how caching works. Let’s fire up Postman and see it working.

Testing

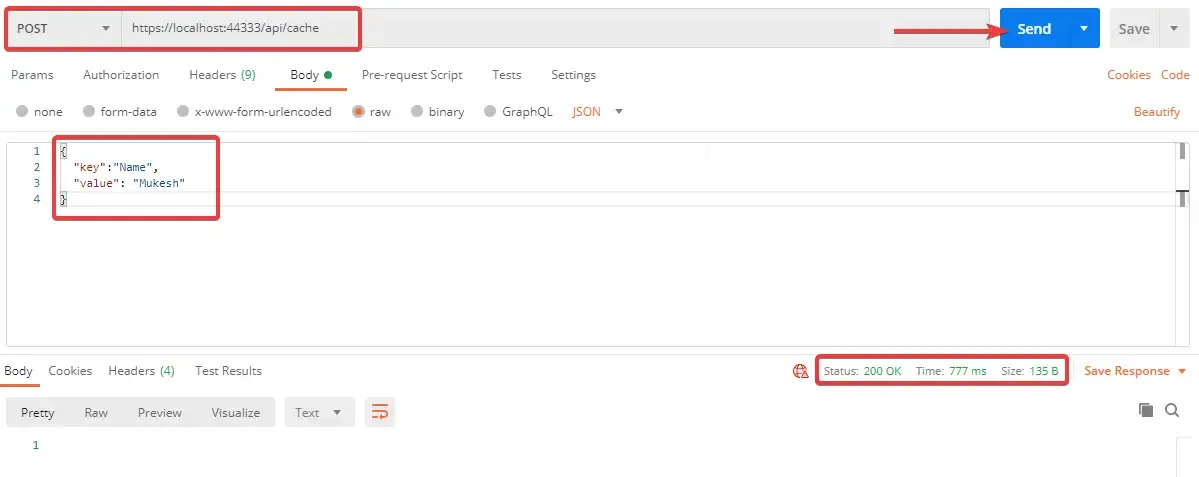

First, let’s set the Cache.

I have set the key to “Name” and the value to “Mukesh”. I will be invoking the ../api/cache POST endpoint. The expected response is a 200 OK status.

Once the Cache is set, let’s fetch the cache entry. We will be invoking the ../api/cache/{key} where the key is the one that you set earlier in the previous POST call. In our case, it was “Name”.

You can see that cache works as expected.

With this, I guess you have a pretty clear understanding of how caching actually works in ASP.NET Core.

Now, this is a very simple implementation of caching which doesn’t make much sense on a practical level, right? So, let me demonstrate a practical implementation where you can use this caching in real-world scenarios.

Practical Cache Implementation

So, for testing purposes, I have set up an API and configured Entity Framework Core. This API will return a list of all customers in the database. If you need to learn about setting up Entity Framework Core in ASP.NET Core, I recommend going through this article - Entity Framework Core in ASP.NET Core - Getting Started

I have also added a few thousand sample records to mimic a real-world use case.

Here is the Customer Controller that has an endpoint to get all the customer records from the database.

```csharp
[Route("api/[controller]")]
[ApiController]
public class CustomerController : ControllerBase
{
    private readonly IMemoryCache memoryCache;
    private readonly ApplicationDbContext context;
    public CustomerController(IMemoryCache memoryCache, ApplicationDbContext context)
    {
        this.memoryCache = memoryCache;
        this.context = context;
    }
    [HttpGet]
    public async Task<IActionResult> GetAll()
    {
        var cacheKey = "customerList";
        if(!memoryCache.TryGetValue(cacheKey, out List<Customer> customerList))
        {
            customerList = await context.Customers.ToListAsync();
            var cacheExpiryOptions = new MemoryCacheEntryOptions
            {
                AbsoluteExpiration = DateTime.Now.AddMinutes(5),
                Priority = CacheItemPriority.High,
                SlidingExpiration = TimeSpan.FromMinutes(2)
            };
            memoryCache.Set(cacheKey, customerList, cacheExpiryOptions);
        }
        return Ok(customerList);
    }
}
```

Line 5 - IMemoryCache definition.

Line 6 - ApplicationDbContext definition.

Line 7 to 11 - Constructor injection.

Line 15 - Here we are setting the cache key internally in our code. It does not make sense to give this option to the user, right?

Line 16 - If there is a cache entry with the key “customerList”, its data is placed into the List object. Otherwise, if there is no such cache entry, we call the database via the context object and store the result as a new cache entry, along with the expiration parameters that we learned about earlier.

Line 27 - Returns the list of customers, either from the cache (if available) or directly from the database.
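The check-then-set pattern in GetAll can also be written with the GetOrCreateAsync extension method, which runs a factory only when the key is missing. Here is a minimal sketch; `CustomerCache` and the `loadCustomers` delegate are hypothetical stand-ins (the delegate takes the place of the EF Core query, and a list of strings takes the place of the Customer entities) so that the example is self-contained.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical wrapper, for illustration only.
public class CustomerCache
{
    private const string CacheKey = "customerList";
    private readonly IMemoryCache memoryCache;
    private readonly Func<Task<List<string>>> loadCustomers;

    public CustomerCache(IMemoryCache memoryCache, Func<Task<List<string>>> loadCustomers)
    {
        this.memoryCache = memoryCache;
        this.loadCustomers = loadCustomers;
    }

    public Task<List<string>> GetAllAsync()
    {
        // The factory runs only on a cache miss; its result is stored
        // under CacheKey with the expiration settings chosen here.
        return memoryCache.GetOrCreateAsync(CacheKey, entry =>
        {
            entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(5);
            entry.SlidingExpiration = TimeSpan.FromMinutes(2);
            entry.Priority = CacheItemPriority.High;
            return loadCustomers();
        });
    }
}
```

The behavior is the same as the controller above: the first call hits the data source, and later calls within the expiration window are served from memory.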

I hope that this part is clear. Let’s see some Postman results for this API endpoint.

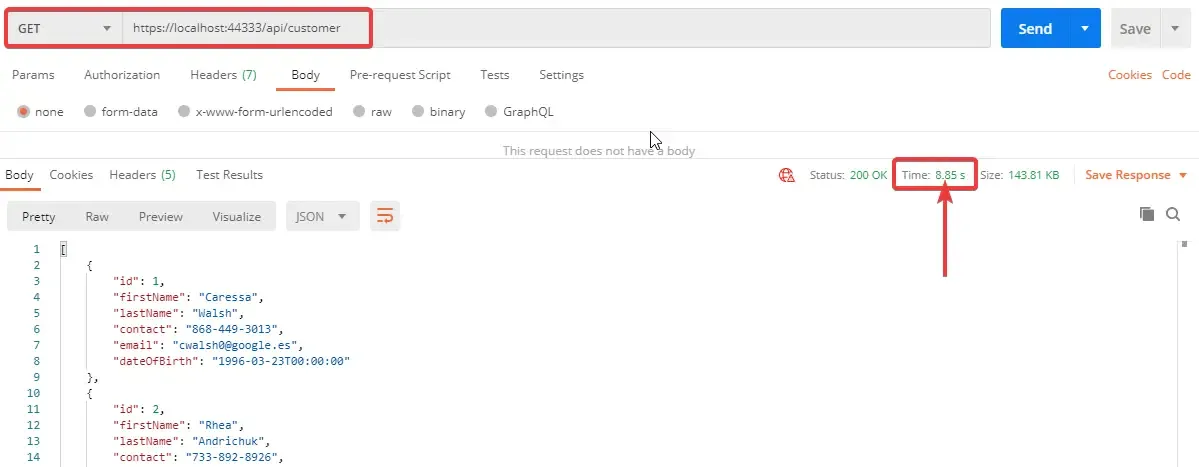

Here is my first API call, which takes about 8 seconds for a few thousand records.

Now, theoretically, in the first call, we are directly calling the database, which may be slow depending on the traffic, connection status, size of the response, and so on. We have configured our API to also store these records in the cache at the same time.

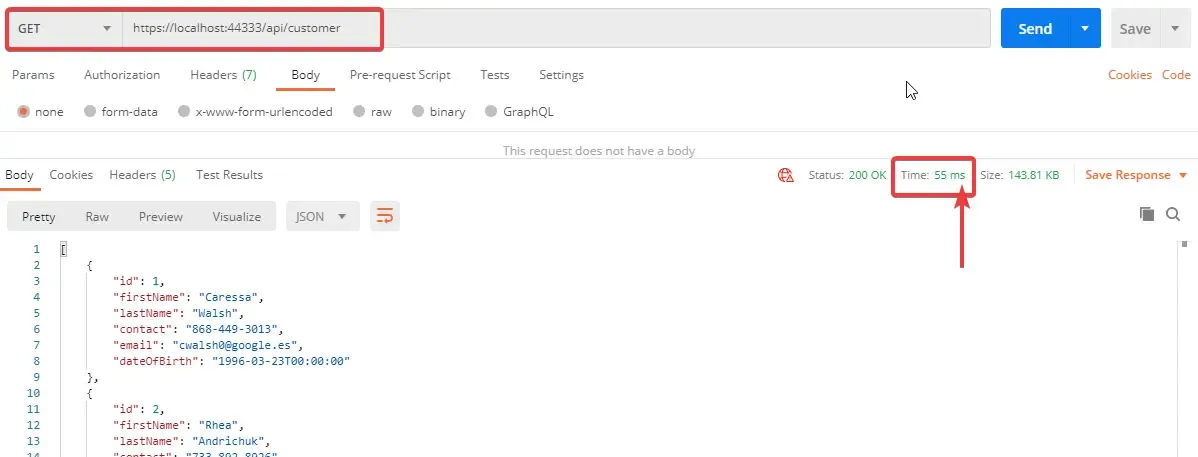

So in the second call, the response is expected to arrive much faster. Let’s say maybe under a second or two, yeah?

As you can see, our second API call takes well under 1 second, just 55 ms, which is pretty amazing compared to 8 seconds. You can see the drastic improvement in the performance, yeah? This is how awesome caching is.

Points to Remember

Here are some important points to consider while implementing caching.

- Your application should never depend on the cached data, as it may be unavailable at any given time. It should always be able to fall back to your actual data source. Caching is just an enhancement that is to be used only if it is available/valid.

- Try to restrict the growth of the cache in memory. This is crucial, as caching may take up your server resources if not configured properly. You can make use of the Size property on entries, together with a SizeLimit on the cache, to limit how much is cached.

- Use Absolute Expiration / Sliding Expiration to make your application much faster and smarter. It also helps restrict cache memory usage.

- Try to avoid external inputs as cache keys. Always set your keys in code.
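Here is a rough sketch of how Size and SizeLimit work together. It assumes a unit of "one entry" for the size, which is an arbitrary choice since the cache does not measure memory itself; `SizeLimitSketch` is a hypothetical name. In an ASP.NET Core app, the same limit can be set with `services.AddMemoryCache(options => options.SizeLimit = 2);`.

```csharp
using Microsoft.Extensions.Caching.Memory;

// Hypothetical helper, for illustration only.
public static class SizeLimitSketch
{
    // Returns whether a third entry is stored in a cache limited to 2 units.
    public static bool ThirdEntryIsStored()
    {
        // SizeLimit is unitless. Once it is set, every entry must declare
        // a Size, otherwise Set throws an InvalidOperationException.
        using (var cache = new MemoryCache(new MemoryCacheOptions { SizeLimit = 2 }))
        {
            var oneUnit = new MemoryCacheEntryOptions { Size = 1 };

            cache.Set("first", "a", oneUnit);
            cache.Set("second", "b", oneUnit);

            // This entry would push the total past the limit, so the cache
            // declines to store it (and may start compacting older entries).
            cache.Set("third", "c", oneUnit);

            return cache.TryGetValue("third", out string ignored);
        }
    }
}
```

Because an over-limit entry is simply not cached, code that reads from the cache still has to be ready to fall back to the data source.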

Background cache update

Additionally, as an improvement, you can use background jobs to update the cache at a regular interval. If the Absolute Expiration is set to 5 minutes, you can run a recurring job every 6 minutes to update the cache entry to its latest available version. You can use Hangfire to achieve this in ASP.NET Core applications.
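As a rough sketch of the idea, here is a recurring refresh written with the built-in BackgroundService class rather than Hangfire, so that it does not need any extra packages. Everything named here is an assumption for illustration: `CustomerCacheRefresher`, the `loadCustomers` delegate (standing in for the database query) and the `interval` parameter.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Hosting;

// Hypothetical hosted service, for illustration only.
public class CustomerCacheRefresher : BackgroundService
{
    private readonly IMemoryCache memoryCache;
    private readonly Func<CancellationToken, Task<List<string>>> loadCustomers;
    private readonly TimeSpan interval;

    public CustomerCacheRefresher(
        IMemoryCache memoryCache,
        Func<CancellationToken, Task<List<string>>> loadCustomers,
        TimeSpan interval)
    {
        this.memoryCache = memoryCache;
        this.loadCustomers = loadCustomers;
        this.interval = interval;
    }

    protected override async Task ExecuteAsync(CancellationToken stoppingToken)
    {
        while (!stoppingToken.IsCancellationRequested)
        {
            // Load the latest data and overwrite the cache entry.
            List<string> customers = await loadCustomers(stoppingToken);
            memoryCache.Set("customerList", customers, new MemoryCacheEntryOptions
            {
                AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(5)
            });

            try
            {
                // Wait for the next refresh, or stop early on shutdown.
                await Task.Delay(interval, stoppingToken);
            }
            catch (OperationCanceledException)
            {
                break;
            }
        }
    }
}
```

If the interval is shorter than the expiration, the entry is replaced before it lapses; with a longer interval, requests that arrive in the gap fall back to the data source as usual.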

Let’s wind up this article here. In the next article (which I will link here once available), we will talk about more advanced caching concepts like Distributed Caching, setting up Redis, Redis Caching and PostBack Calls, and much more.

Summary

In this detailed article, we have learnt about caching, the basics, In-Memory Caching, implementing In-Memory Caching in ASP.NET Core, and a practical implementation as well. You can find the completed source code here. I hope you learnt something new from this article. If you have any comments or suggestions, please leave them in the comments section below. Do not forget to share this article within your developer community. Thanks and Happy Coding!